Stable Neural Interfaces Through Artificial Intelligence-based Manifold Discovery

Chethan Pandarinath, Department of Biomedical Engineering

Emory University

People: Xuan Ma, Fabio Rizzoglio

Neural decoders translate brain activity into movement intent, which can then be used to control devices or to move paralyzed limbs via functional electrical stimulation (FES). However, because of neural interface instabilities, the set of recorded neurons changes over hours to days. Current standard practice is to recalibrate the decoder at least once, and sometimes several times, per day. Constantly changing decoders also force users to adapt, as though the feel of a tool changed each day. Recalibration is also a significant burden: it interrupts device use and typically requires third-party intervention. Maintaining stable neural decoders is therefore a critical challenge for deploying neural interface systems in practical settings.

We have recently observed that the mapping from low-dimensional (low-D) neural manifolds to behavior can remain invariant for months and even years, despite turnover in the particular neurons being recorded[1]. Other recent studies have also shown that low-D manifolds allow for more stable neural decoding[2]. However, most attempts, including our own recently published results, have used simple linear methods for manifold discovery (e.g., PCA, factor analysis, or linear dynamical systems), which are grossly underpowered compared with modern machine learning approaches.
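For readers less familiar with the linear baseline mentioned above, the sketch below illustrates it under simple assumptions: five simulated neurons are driven by a single hidden latent variable, PCA (computed here by power iteration on the covariance matrix) recovers a 1-D manifold coordinate, and a fixed least-squares readout maps that coordinate to behavior. All data, loadings, and noise levels are hypothetical choices made for illustration; this is the linear approach the project aims to improve on, not the NoMAD method.

```python
import math
import random

rng = random.Random(1)

# Simulated data (all values are illustrative assumptions, not recorded data).
T = 200
latent = [math.sin(2 * math.pi * t / 50) for t in range(T)]   # hidden 1-D manifold coordinate
behavior = [2.0 * s + 0.5 for s in latent]                     # behavior is a linear readout of the latent
loadings = [0.5, 1.5, -1.0, 0.8, 1.2]                          # how strongly each neuron reflects the latent
rates = [[a * s + rng.gauss(0, 0.1) for a in loadings] for s in latent]  # T x 5 noisy activity


def top_principal_component(X, iters=100):
    """Center X and return (centered X, unit-norm first principal component) via power iteration."""
    n, d = len(X), len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(d)]
    Xc = [[row[j] - means[j] for j in range(d)] for row in X]
    cov = [[sum(r[i] * r[j] for r in Xc) / n for j in range(d)] for i in range(d)]
    v = [1.0] * d
    for _ in range(iters):
        u = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(ui * ui for ui in u))
        v = [ui / norm for ui in u]
    return Xc, v


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


# 1) Linear manifold discovery: project activity onto the first principal component.
Xc, pc = top_principal_component(rates)
z = [sum(r[j] * pc[j] for j in range(len(pc))) for r in Xc]

# 2) Fixed linear decoder from the manifold coordinate to behavior (ordinary least squares).
zm, ym = sum(z) / T, sum(behavior) / T
slope = sum((zi - zm) * (yi - ym) for zi, yi in zip(z, behavior)) / sum((zi - zm) ** 2 for zi in z)
intercept = ym - slope * zm
pred = [slope * zi + intercept for zi in z]

print(f"decoding correlation: {pearson(pred, behavior):.3f}")
```

Because the decoder reads out the manifold coordinate rather than individual neurons, it can in principle be reused as long as new recordings can be mapped back onto the same manifold; a linear projection such as this one is the simplest possible way to find that coordinate.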

In this project, directed by Dr. Chethan Pandarinath at Emory University, we will develop an AI-based platform that uncovers low-dimensional manifolds from neural activity and adjusts to neural interface instabilities. Our platform for Nonlinear Manifold Alignment Decoding (NoMAD) will combine two state-of-the-art AI-based approaches, both developed by our groups: 1) Latent Factor Analysis via Dynamical Systems (LFADS)[3], which uses recurrent neural networks (RNNs) to precisely uncover low-D manifolds from neural population activity; and 2) Adversarial Neural Manifold Alignment (ANMA)[4], which uses adversarial networks to align new data from a previously unseen neural population onto a previously determined manifold. Because LFADS and ANMA are unsupervised, they do not require data collected explicitly for recalibration. This will allow NoMAD to automatically align changing neural data, achieving stable decoding that is independent of neuronal turnover without interrupting device use. We expect NoMAD to improve further on existing manifold-based approaches, with the aim of achieving stable decoding for 6-24 months with no detectable performance loss relative to daily recalibration.

NoMAD will be applicable to a wide variety of neural interfaces, as manifolds have been discovered across neural systems (motor, sensory, cognitive)[5]. Therefore, in addition to controlling external devices to restore motor function (e.g., robotic arms, communication devices, and FES of paralyzed limbs), NoMAD also has the potential to improve therapies for Parkinson’s disease, epilepsy, speech disorders, depression, and other psychiatric disorders.

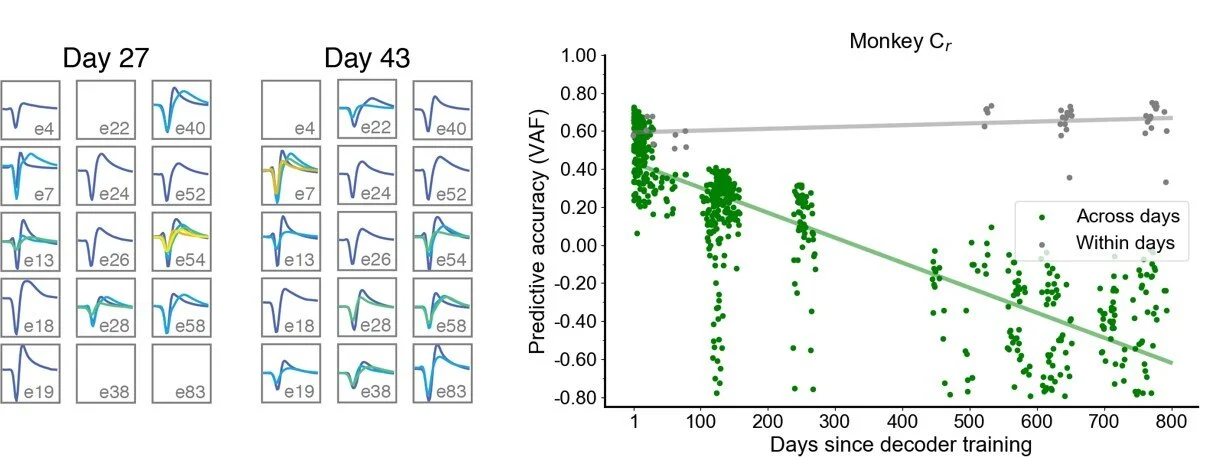

Fig. Instability: Left: average action potential waveforms of example sorted neurons on two days (Day 27 and Day 43). Right: prediction performance using a fixed brain-machine interface (BMI) decoder. Gray symbols represent the sustained performance of a decoder retrained on a daily basis. Green symbols illustrate the deterioration in performance of a fixed decoder.

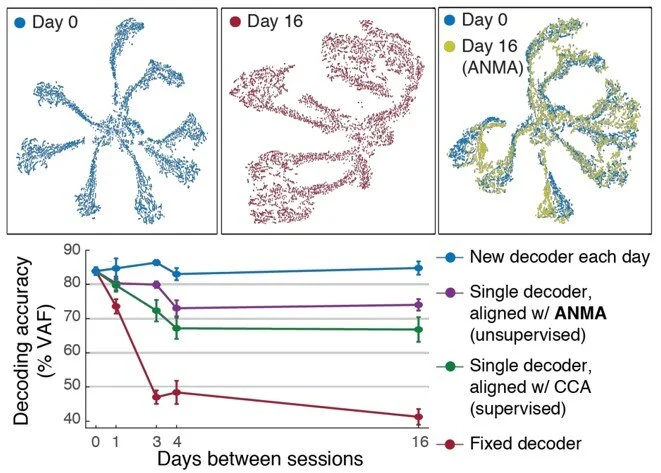

Fig. Manifold Alignment: Top: latent variables on day 0; latent variables on day 16 before alignment; and latent variables on day 16 after alignment to those of day 0. Bottom: electromyography (EMG) prediction performance using a fixed BMI decoder. Blue symbols represent the sustained performance of interfaces retrained on a daily basis. Red symbols illustrate the deterioration in performance of a fixed interface without domain adaptation. The performance of a fixed interface after domain adaptation is shown for CCA (green) and ANMA (purple). Error bars represent standard deviation of the mean.

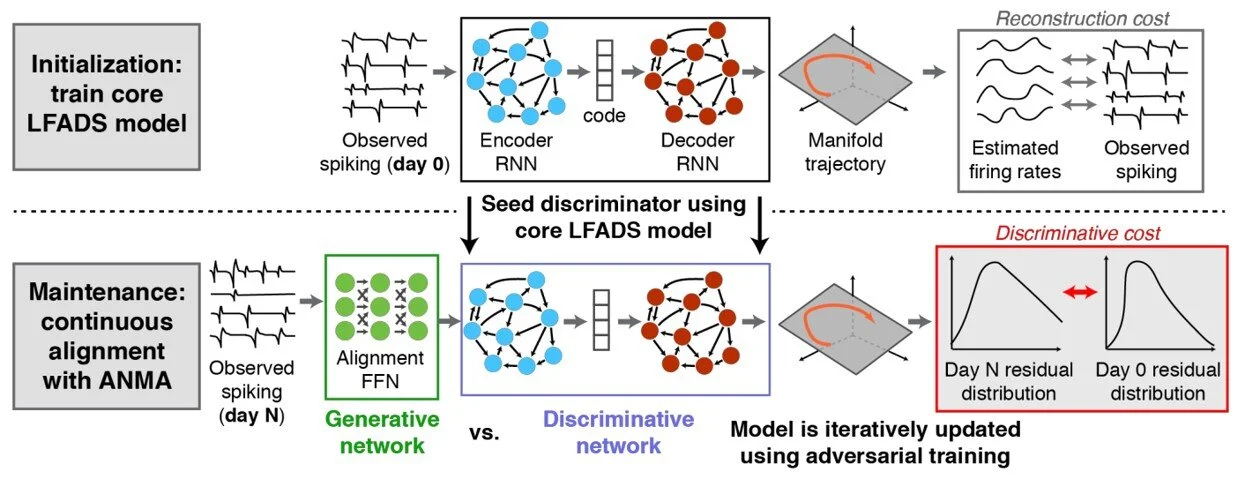

Fig. NoMAD Arch: Architecture underlying the NoMAD approach. First, a core model (LFADS) is trained on an initial training dataset. The model’s stability is then maintained by applying the ANMA approach to new data in batches as they arrive. This approach assumes that the distribution of values traversed by the manifold is consistent over time. Changes in this distribution are corrected by an alignment network (green), a feedforward network whose objective is to minimize differences in distribution between the training data and new data that are subject to nonstationarities. During the maintenance process, the original network’s weights are fixed, and only the alignment weights are learned.
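The maintenance loop described in the caption can be illustrated with a deliberately simplified sketch. Several assumptions are made here and none of them are the actual NoMAD or ANMA implementation: the frozen "core model" is a fixed linear channel-to-latent map standing in for LFADS; the alignment network is reduced to a hypothetical per-channel gain and offset; and the distribution-matching objective compares only the mean and covariance of the latent outputs, which is swapped in for the adversarial objective used by ANMA. Gradients are taken by finite differences to keep the example dependency-free. The names `core`, `align`, `latent_moments`, `mismatch`, and `fit_alignment`, as well as the simulated gains and offsets, are all illustrative.

```python
import random

rng = random.Random(0)

# Hypothetical ground truth: 4 recorded channels driven by a 2-D latent.
A = [[1.0, 0.2], [0.3, 1.0], [0.8, -0.5], [-0.4, 0.9]]
# Frozen "core model": a fixed linear channel-to-latent map (stand-in for LFADS).
# Stored as a tuple so it cannot be modified during maintenance.
W = ((0.5, 0.1, 0.4, -0.2), (0.1, 0.5, -0.2, 0.4))


def simulate(n, gains, offsets):
    """Draw n samples; per-channel gains/offsets mimic recording nonstationarities."""
    data = []
    for _ in range(n):
        s = [rng.gauss(0, 1), rng.gauss(0, 1)]
        x = [sum(a * si for a, si in zip(row, s)) + rng.gauss(0, 0.1) for row in A]
        data.append([g * xi + o for g, xi, o in zip(gains, x, offsets)])
    return data


def core(x):
    """Apply the frozen core model to one sample of channel activity."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def align(params, x):
    """Hypothetical alignment layer: per-channel gain and offset (the only trainable weights)."""
    n = len(x)
    return [g * xi + o for g, xi, o in zip(params[:n], x, params[n:])]


def latent_moments(zs):
    """Mean and covariance (3 unique entries) of 2-D latent samples."""
    n = len(zs)
    m0 = sum(z[0] for z in zs) / n
    m1 = sum(z[1] for z in zs) / n
    c00 = sum((z[0] - m0) ** 2 for z in zs) / n
    c11 = sum((z[1] - m1) ** 2 for z in zs) / n
    c01 = sum((z[0] - m0) * (z[1] - m1) for z in zs) / n
    return [m0, m1, c00, c11, c01]


def mismatch(params, new_data, target):
    """Squared distance between latent moments of aligned new data and of the training data."""
    moments = latent_moments([core(align(params, x)) for x in new_data])
    return sum((m - t) ** 2 for m, t in zip(moments, target))


def fit_alignment(new_data, target, steps=60, lr=0.5, eps=1e-5):
    """Gradient descent (central finite differences + step halving) on the alignment weights only."""
    n = len(new_data[0])
    params = [1.0] * n + [0.0] * n  # start at the identity alignment
    loss = mismatch(params, new_data, target)
    for _ in range(steps):
        grad = []
        for i in range(len(params)):
            up, down = params[:], params[:]
            up[i] += eps
            down[i] -= eps
            grad.append((mismatch(up, new_data, target) - mismatch(down, new_data, target)) / (2 * eps))
        step, improved = lr, False
        while step > 1e-8 and not improved:  # halve the step until the mismatch decreases
            cand = [p - step * g for p, g in zip(params, grad)]
            cand_loss = mismatch(cand, new_data, target)
            if cand_loss < loss:
                params, loss, improved = cand, cand_loss, True
            else:
                step *= 0.5
        if not improved:
            break  # no descent step found: treat as converged
    return params, loss


day0 = simulate(100, [1.0] * 4, [0.0] * 4)                          # training data
day16 = simulate(100, [1.5, 0.6, 1.2, 0.8], [0.5, -0.3, 0.0, 0.4])  # unlabeled, drifted data
target = latent_moments([core(x) for x in day0])

initial_loss = mismatch([1.0] * 4 + [0.0] * 4, day16, target)
params, final_loss = fit_alignment(day16, target)
print(f"latent mismatch before alignment: {initial_loss:.4f}, after: {final_loss:.4f}")
```

Two properties of the caption carry over to this sketch: no behavioral labels are used to fit the alignment (only the unlabeled new batch and summary statistics of the training data), and the core model `W` is never updated. Matching only two moments is much weaker than the adversarial objective, so this example shows the structure of the maintenance step rather than its full power.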

Citations:

[1] Gallego, Juan A., Matthew G. Perich, Raeed H. Chowdhury, Sara A. Solla, and Lee E. Miller. "Long-term stability of cortical population dynamics underlying consistent behavior." Nature Neuroscience (2020): 1-11.

[2] Nuyujukian, Paul, Jonathan C. Kao, Joline M. Fan, Sergey D. Stavisky, Stephen I. Ryu, and Krishna V. Shenoy. "Performance sustaining intracortical neural prostheses." Journal of Neural Engineering 11, no. 6 (2014): 066003.

[3] Pandarinath, Chethan, Daniel J. O’Shea, Jasmine Collins, et al. "Inferring single-trial neural population dynamics using sequential auto-encoders." Nature Methods 15 (2018): 805-815.

[4] Farshchian, Ali, Juan A. Gallego, Joseph P. Cohen, Yoshua Bengio, Lee E. Miller, and Sara A. Solla. "Adversarial domain adaptation for stable brain-machine interfaces." ICLR (2019).

[5] Gallego, Juan A., Matthew G. Perich, Lee E. Miller, and Sara A. Solla. "Neural manifolds for the control of movement." Neuron 94, no. 5 (2017): 978-984.